Groundwater levels across US affected by Mexico earthquake

Using ggplot2 to inspect water levels affected by earthquake.

What happened?

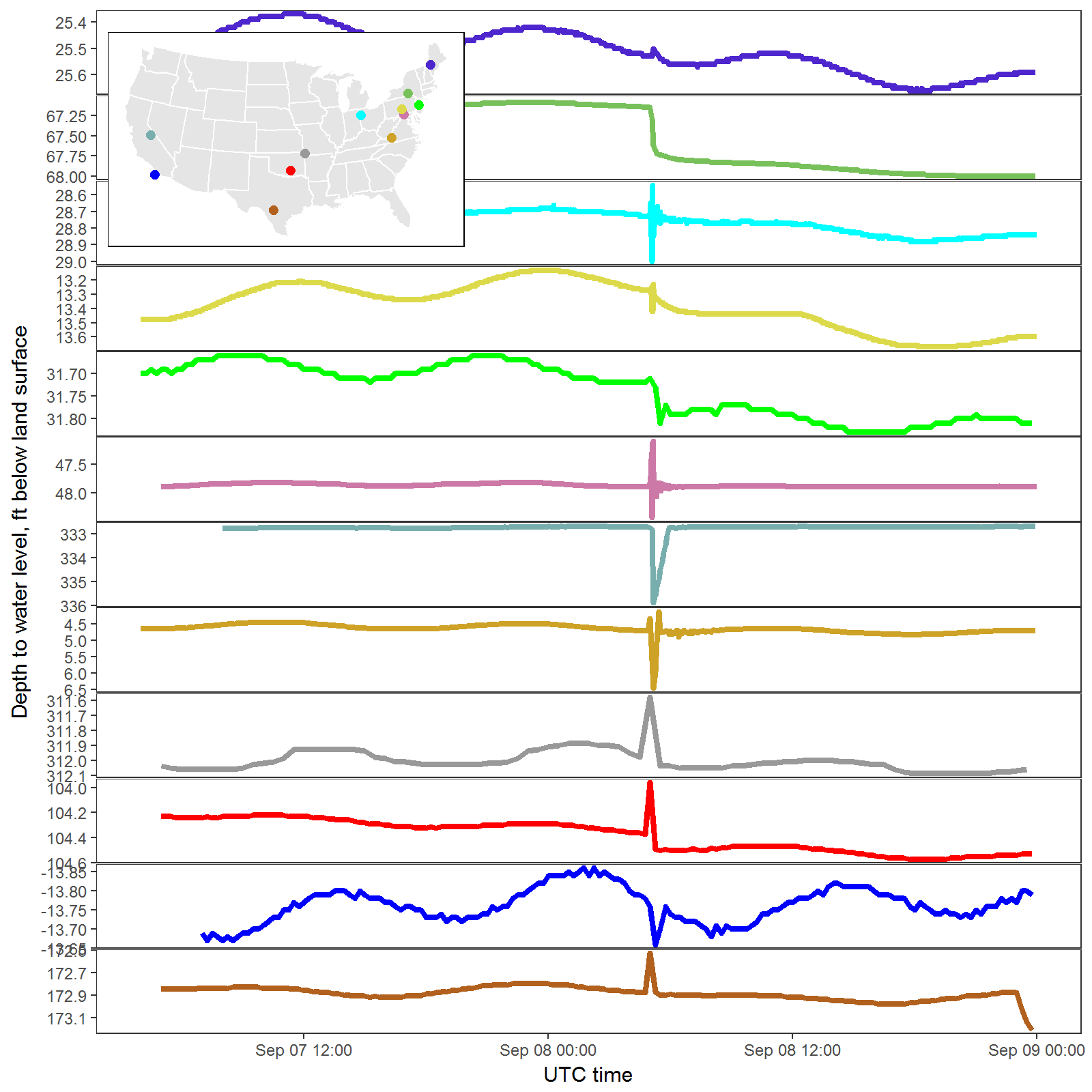

A magnitude 8.1 earthquake was measured in the Pacific region offshore Chiapas, Mexico at 04:49:21 UTC on September 8th 2017.

Working in the Water Mission Area for the USGS, our Vizlab Team was already hard at work polishing up water visualizations for the devastating Hurricane Harvey , as well as creating a new visualization for Hurricane Irma . That is to say, the resources available for a timely visualization were already spent.

By 10 am on Friday however, a team of USGS groundwater scientist had realized that the affects of the earthquake were being observed in groundwater water level measurements across the United States, and were collecting a list of sites that clearly showed the phenomena. It was interesting to view the data directly from the National Water Information System NWIS pages.

While the NWIS site allowed a very easy way to observe the data, it was not quite as dramatic and interesting since it was only plotted in local time zones (so the earthquake peaks were not lining up), and the y-axis scales were so different, they could not be plotted on a single graph:

The challenge was on: could we very quickly get a graph out that aggregates this data, unifies the time zones, and visualize the information in an intuitive way for the general population? This post documents the process of rapidly creating the plot in order to get the information out to social media in a timely manner. The final plot could certainly be improved, but with the limited resources and time constraints, it still managed to be a fun and interesting graphic.

Aggregate USGS Data

Without question, the hardest part is to find the right data. Luckily for me, this was already done by 10am by the groundwater scientists Rodney A. Sheets, Charles Schalk, and Leonard Orzol (contact information is provided at the end of this post). They provided me with a list of unique site identification numbers from groundwater wells across the nation, and a “parameter code” that represents water levels measurements.

Using the dataRetrieval R package, it then becomes trivial to access USGS data and import it into the R environment.

The following code retrieves the data:

By default, all the data in this data frame comes back in the “UTC” time zone. So, we’ve already crossed one of the hurdles - unifying time zones.

dataRetrieval attaches an attribute siteInfo to the returned data. The information in that attribute includes station names and location. We can extract that information and sort the sites from south to north using a bit of dplyr.

Now, we can do a bit of filtering so that we will only plot the 24 hours of on September 8th (UTC). Also, we can use the south_to_north data frame to create ordered levels for the sites:

Plot Explorations

Since the y-axis is quite different between sites, it seemed to make the most sense to use ggplot2’s faceting. The first iteration was as follows:

Initial plot of water levels

The site_no column (the unique site identification numbers) are not terribly useful for a general audience, so we decided to eliminate those facet labels on the right and add a map as a legend. Also, we wanted to use consistent colors across the map and lines.

So, first, let’s map some colors to the unique site_no:

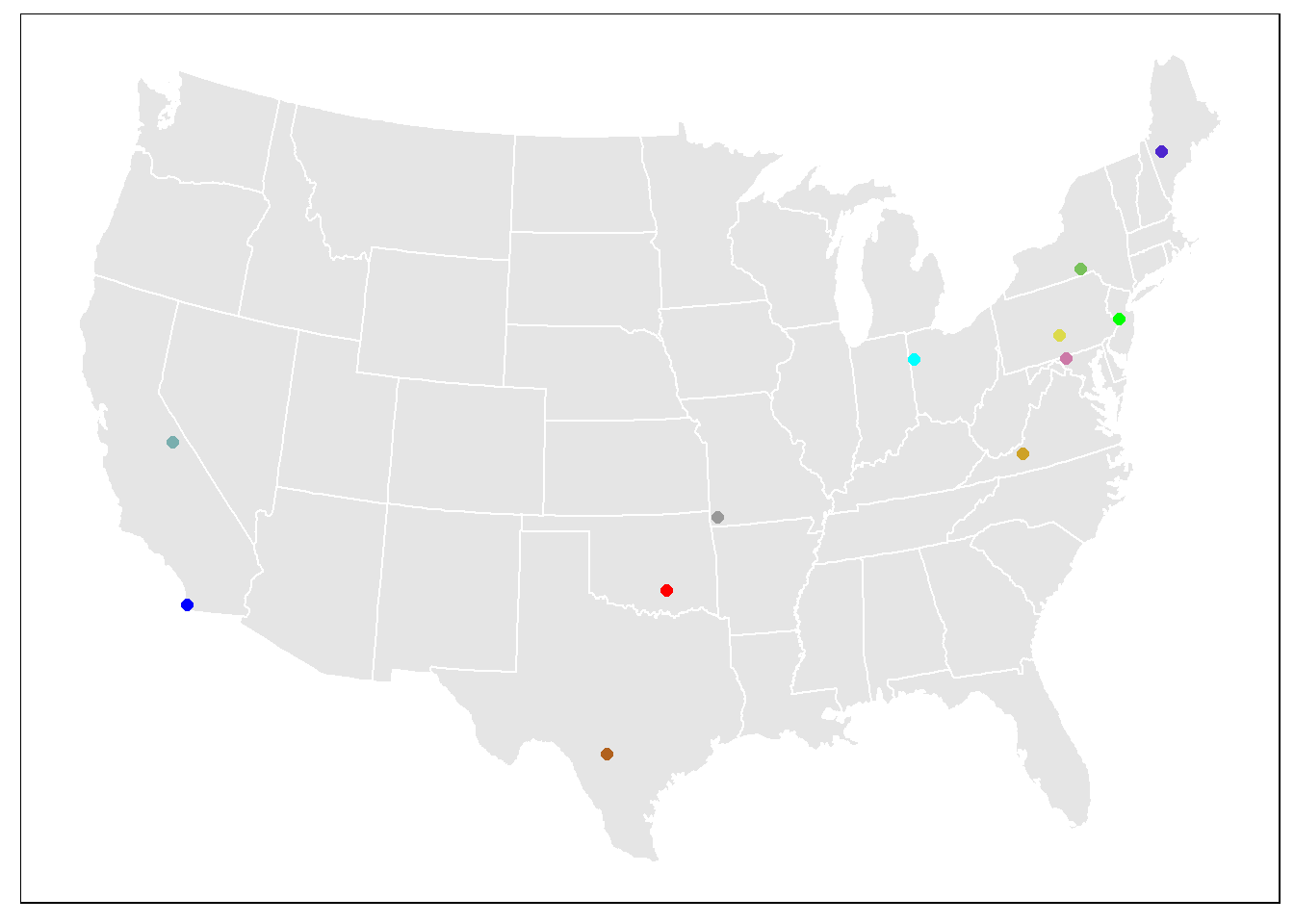

Map Legend

The following code was used to map the sites to a basic lower-48 map of the United States:

Site locations on US map

The initial line graphs needed to be updated with those colors and dropping the facet labels:

Finally, the graphs were combined using the viewport function from the grid package:

## png

## 2

Water levels in US affected by Mexico earthquake

What does the plot mean?

While this post is primarily focused on the process used to create the plot, far more effort was expended by others in understanding and describing the data. The following information was released with the graph, initially as a Facebook post USGSNaturalHazards :

Did you know? We often see a response to large (and sometimes not so large) earthquakes in groundwater levels in wells. The USGS maintains a network of wells for monitoring various things like natural variability in water levels and response to pumping and climate change across the U.S. The well network can be seen here: https://groundwaterwatch.usgs.gov/ The M8.1 earthquake that took place 9-8-2017 in the Pacific region offshore Chiapas, Mexico was observed across the U.S. in confined aquifer wells within the network. The wells in this plot are available from the National Water Information System (NWIS) web are from: TX, CA (southern), OK, MO, VA, CA (central), MD, NJ, PA, OH, NY and ME (from south to north). This is just a small sampling of the wells. USGS hydrologists are poring over the data from other states to see what the full extent of response might have been.

Read about how the 2011 earthquake in Mineral, VA affected ground water levels here: https://doi.org/10.1130/2015.2509(01)

Find more information about groundwater response to earthquakes. https://earthquake.usgs.gov/learn/topics/groundwater.php

The frequency of data in these plots varies – some are collected every minute, some every 15 minutes, and some hourly – depending upon the purpose of the monitoring, giving different responses to the earthquake. The magnitude of the response depends on the local geology and hydrology, when the data point was collected, and when the seismic wave hit the aquifer that the well penetrates.

Have Questions on Groundwater?

Related Posts

Calculating Moving Averages and Historical Flow Quantiles

October 25, 2016

This post will show simple way to calculate moving averages, calculate historical-flow quantiles, and plot that information. The goal is to reproduce the graph at this link: PA Graph .

Reproducible Data Science in R: Iterate, don't duplicate

July 18, 2024

Overview This blog post is part of a series that works up from functional programming foundations through the use of the targets R package to create efficient, reproducible data workflows.

Reproducible Data Science in R: Writing better functions

June 17, 2024

Overview This blog post is part of a series that works up from functional programming foundations through the use of the targets R package to create efficient, reproducible data workflows.

Reproducible Data Science in R: Writing functions that work for you

May 14, 2024

Overview This blog post is part of a series that works up from functional programming foundations through the use of the targets R package to create efficient, reproducible data workflows.

Hydrologic Analysis Package Available to Users

July 26, 2022

A new R computational package was created to aggregate and plot USGS groundwater data, providing users with much of the functionality provided in Groundwater Watch and the Florida Salinity Mapper .