Reproducible Data Science in R: Writing better functions

Write functions in R that run better and are easier to understand.

What's on this page

Overview

This blog post is part of a series that works up from functional programming foundations through the use of the targets R package to create efficient, reproducible data workflows.

Where we're going: This article explores techniques for improving functions written in R using an example function that fetches stream gage site data using dataRetrieval (deCicco et al. 2024). First, we'll underscore the importance of function names and how to write a good one. Then we'll go through writing intuitive function inputs (that is to say, arguments) and outputs (or return values). Lastly, we will discuss the importance of function documentation to guide future users.

Audience: This article assumes that you know how to write a function in R - if not, see Reproducible Data Science in R: Writing functions that work for you. Perhaps you are a novice R user -- you have code that runs but maybe not as efficiently as it could.

This article builds on that momentum of the previous blog post , heavily drawing upon Tidy design principles to improve our functional programming skills. With a little time invested in how we write functions, we can save ourselves and our collaborators a lot of time trying to decipher our code by focusing on intuitive and user-centered design. There are a lot of ways to do this, but this article contains a couple of the most straightforward and important aspects of writing useful and usable functions.

Function names: Our function’s first impression

Some time should be spent considering the name of your function: believe it or not, names can have a significant impact on the usability of your function. To the extent practicable, your function name should:

- Be descriptive yet concise

- Be evocative but not distracting

- Favor explicit and expressive names over short and vague names.

See The tidy tools manifesto for some relevant philosophy.

Let’s say we want to write a function that uses dataRetrieval::readNWISsite()

to download site data and filters that data frame to a few specific columns needed for our analysis. Here are some examples of possible names:

return_gage_sites_and_subset_columns(): Too longprocess(): Too common (at least 71 packages on CRAN include a function calledprocess())fetch(): Too vaguefetch_gage_info(): Ahh, just right

Note that in all the above examples, the names begin with a present tense verb. This is also helpful because it helps the user understand the purpose by giving action to the function - after all, a function performs some action (verb) to some object (noun). You may also notice the use of snake_case

. Naming conventions are not really enforced in R as long as your name is syntactic

; however, there are some conventions that point us toward the use of snake_case in our function names.

lowerCamelCaseandUpperCamelCaseare used to define other types of objects and classes in the R universe (see S4 generic objects and R6 if you’re curious about these data structures)period.caseis often confusing to Python users because in Python dots are used to access an object’s methods and attributes .

There are many counter examples of these naming convention suggestions by R functions, including base R functions: anyNA()

uses lowerCamelCase, is.na()

uses period.case, as.Date()

uses lowerCamelCase and period.case, Encoding()

is capitalized, but enquote()

is not. They are all over the place! It’s too late to try and standardize all of those functions built in the past, but it seems useful to pick a convention for ourselves moving forward. While snake_case is not universally suggested for function names (e.g., Google’s R Style Guide

), it is often recommended, including by some voices in the R community (example

), including The tidyverse style guide

.

Writing multiple functions?

When writing several functions that perform similar work, it can be useful to use a consistent naming scheme. Having function names that all look or sound similar clues the user into how they are related. For example, making them all imperative tense verbs such as most of the functions in the terra or dplyr packages. Another technique is using a prefix such as the str_ for functions in stringr or geom_ by functions in ggplot2.

For further reading about function names, see:

- Function names | Tidy design principles

- 2 Syntax | The tidyverse style guide

- 25.2 Functions: Style | R for data science

- Consistent naming conventions in R | R-bloggers

Arguments: Make the interface intuitive

We can all agree that the purpose of your function is to be used, not to be confused (am I right? 😏). You can reduce the cognitive burden required to use a function with an intuitive interface; by interface we mean how the user interacts with the function, primarily via the arguments.

Let’s examine a function that is not terribly intuitive.

fetch_gage_info <- function(y, x) {

dataRetrieval::readNWISsite(y)[, x]

}

Can you tell what’s going on here? After a minute and maybe reading the help documentation (?dataRetrieval::readNWISsite

) you may be able to figure out that the function returns the xth columns from a data frame containing metadata for a USGS site with site number y, but it’s not with the help of the vaguely named arguments. So what would help?

Put the most important argument first. Putting the most important argument first is a useful signal to the user how the function works and what information it modifies or computes. In dplyr::filter()

, having the first argument be the data frame that we are filtering quickly clarifies to the user how this function is going to work.

That may sound obvious, but there are many counter examples all through R. A good example of an R function where the most important object is not first is gsub()

. The gsub() function requires the user to define pattern, replacement, x; in the vector x, the characters in pattern are replaced with the characters in replacement.

gsub(pattern = " ", replacement = "_", x = c("string 1", "string 2"))

#> [1] "string_1" "string_2"

While it is somewhat subjective, x is very likely the most important argument because that is the vector that is being evaluated and manipulated: we are doing something to x. An additional benefit of x going first is that it is more convenient when using the pipe operator (|>

or %>%

) if the object being transformed is first since you don’t have to use a placeholder (_ or ., respectively). For example, dplyr functions (e.g., select()

, mutate()

) all take a data frame as their first argument, so you can pipe them all together in a pipe chain; lm()

takes data as the second argument, so you would have to use a placeholder.

# When the data is the first argument, this works:

mtcars |>

dplyr::select(hp, mpg)

# But when data is not the first argument, you have to use a placeholder:

mtcars |>

lm(hp ~ mpg, data = _)

For further reading on making the most important argument first, see Put the most important arguments first | Tidy design principles .

Optional arguments should have defaults. What is an optional argument? All the arguments in your function need a value, right? From the point of view of a function writer, that is true – all arguments need a value for the function to run. But what about from the function user perspective? Optional arguments are arguments that you don’t necessarily want to require the user to define to make the function work. You may be using default arguments without knowing it already! For example:

dplyr::mutate(mtcars, mpg_hp_ratio = mpg / hp) |>

head(2)

#> mpg cyl disp hp drat wt qsec vs am gear carb mpg_hp_ratio

#> Mazda RX4 21.0 6 160 110 3.90 2.620 16.46 0 1 4 4 0.1909091

#> Mazda RX4 Wag 21.0 6 160 110 3.90 2.875 17.02 0 1 4 4 0.1909091

If we look at the function documentation for dplyr::mutate()

we can see that there are four optional arguments that have defaults (.by, .keep, .before, .after). Since we did not specify any values for these arguments, mutate() assumed the defaults (e.g., it retained all columns from .data because “all” is the default value for .keep).

When we include default values, we should provide some thought to meaningful and intuitive default values. For example, if you use the mean() function, how often are you using the trim argument? Probably not often, because it has a useful a default value, trim = 0. Most of the time when we are using mean() we want the mean of an entire vector of numbers which has not been truncated at the tails; it would be annoying to have to write mean(x, trim = 0) every time we used that function. So the function writers wisely opted to make trim an optional argument by setting a default. You can see the default arguments in the function documentation:

?mean

#> [truncated]

#> mean(x, ...)

#>

#> ## Default S3 method:

#> mean(x, trim = 0, na.rm = FALSE, ...)

#> [truncated]

How do you make a default value for an argument? Let’s look at our hypothetical function. If we wanted to specify that our output data frame should have the “site_no” and “station_nm” columns we would specify the default value by setting the argument equal to the default in the function definition. For example:

fetch_gage_info <- function(y, x = c("site_no", "station_nm")) {

dataRetrieval::readNWISsite(y)[, x]

}

In the case where a user doesn’t specify a value for x, our default will be used. So fetch_gage_info("13340600") is equivalent to running fetch_gage_info("13340600", c("site_no", "station_nm")).

fetch_gage_info("13340600")

#> site_no station_nm

#> 1 13340600 NF CLEARWATER RIVER NR CANYON RANGER STATION ID

Choosing a good default can be deceptively difficult. As a general principle, consider having defaults that don’t tamper with or alter the input data. Using mean() again as an example, trim = 0 is an effective default argument because it doesn’t alter the numeric vector before calculating the mean. When in doubt, favor a lighter-handed default.

Required arguments should not have defaults. Default values are very important in guiding the user experience of a function. However, if you go overboard with the defaults and give a required argument a default, you are potentially setting the user up for a confusing experience. Required arguments are those that would render the function useless if omitted or are unlikely to meet a user’s needs when set to a default. You can think of required arguments as the nouns to which your function/verb is applied while optional arguments are adverbs that modify or qualify that verb.

Consider mean() again. What if the default value for x was x = c(1, 2, 3)? It wouldn’t be very intuitive to run mean() and have the output be 2. A default here isn’t useful and doesn’t make sense. It would make much more sense if instead there was an error telling us that we need some numbers of which to find the mean. Fortunately, the function authors agreed:

# The real default behaviour of `mean()`

mean()

#> Error in mean.default() : argument "x" is missing, with no default

For further reading on default arguments, see Required args shouldn’t have defaults | Tidy design principles .

Argument names should be meaningful. Similar to the function name suggestions above, as a general principle we want our argument names to be descriptive. We want to consider how using the function could be made easier for the user. So we probably want to avoid single letter argument names, like x. In most cases, x doesn’t have any intrinsic meaning (other than it’s a variable) and does not provide any additional information about its function.

So, putting all of these argument name suggestions together, might we suggest the following arguments for our hypothetical function? Does this seem a bit more intuitive?

# Previous version -----

fetch_gage_info <- function(y, x = c("site_no", "station_nm")) {

dataRetrieval::readNWISsite(y)[, x]

}

# New version -----

fetch_gage_info <- function(site_ids, col_names = c("site_no", "station_nm")) {

dataRetrieval::readNWISsite(siteNumbers = site_ids)[, col_names]

}

Return values: Does the output makes sense?

Return only a single object. A function should do one thing (sometimes called “Curly’s law” ); in this case we mean that it should return one object. The benefits of writing single-purpose functions can be subtle, but powerful. By ensuring that a function does one thing, it allows us to (1) make our code more readable and transparent, (2) debug our code quicker, and (3) reuse our function more easily. Sometimes we want to return two objects though. In those cases put them in one object to contain them. If you can (that is, if you have multiple vectors of the same length), package them as columns in a data frame. If that isn’t possible, make them elements in a list. And in either case, name those elements descriptively. You can read more on the this at:

- Returning multiple values | Tidy design principles

- Clean Code: Functions Should Do Exactly One Thing! | Medium

- this helpful StackExchange answer .

Similarly, be careful with side effects in your function. Side effects occur when our function changes the state of our environment. This can be things like changing global options (with options()), removing objects in the global environment (e.g., rm(list=ls())), modifying a file on the disk (like write.csv()), or installing software (like install.packages()). While it is not bad to have our functions have side effects, we should be careful that we are making that the primary operation of our function (i.e., a single function not both return an object and perform a side effect) and that it is happening very explicitly (e.g., by writing progress messages to the console while the function runs).

For example, if your function calls options(), changes are being made to the global environment (i.e., outside of the function) even though it is being called from within a function. This may result in unintended consequences in your code that can be difficult to troubleshoot.

read_some_data <- function(in_file) {

options(stringsAsFactors = FALSE)

read.csv(in_file)

}

Even though options() is being called within the function above, it is changing options outside of the function. Furthermore, these changes are happening implicitly within read_some_data(), so the user wouldn’t know that stringsAsFactors had changed unless they read the function’s source.

You should be careful when writing a function that reaches outside of its own environment to change the user’s global environment or computer. One possible exception is if the primary utility of the function is to perform a side effect and the function’s name effectively describes this side effect (e.g., write.csv()). Either way, it’s good practice to notify the user of the side effect’s actions by writing messages to the console (see the message()

function documentation or cli

R package for more information).

For further reading on side effects, see Side-effect functions should return invisibly | Tidy design principles and Side-effect soup | Tidy design principles .

Lastly, return values should be type stable. This means that the output object type should be constant or easily predictable. A common example

of a function that does not do this well is sapply()

. It is not always clear based on the inputs to sapply() whether you are going to get a vector, a list, or a matrix. The user may be using the output from this function and, if so, will be expecting it in a particular format; therefore, the function must return an object in a predictable manner. For further reading on type-stable functions, see Type-stability | Tidy design principles

.

When to specify argument names

When we call a function we can specify arguments by name, or we can omit the names if we ensure that our arguments are in the correct order. But which one should you use? You've seen both in example code throughout the post. There are no hard and fast rules but we have some suggestions. Mandatory arguments (without defaults), which are typically the data the function is evaluating are usually safe to omit the name because these can easily be assumed. For example, we don't typically write mean(x = 1:10), and instead just write mean(1:10). Explicitly naming arguments is more important for those arguments that modify the computation and/or have defaults. For example, it is a good idea to specify the na.rm = in mean(x = c(1, 5, NA), na.rm = TRUE) because it would be less obvious and more difficult to intuit. There are some good guidelines in 2.3.1 Named argument | The tidyverse style guide

Documentation: Document like others will use it

Documenting your function is probably the most critical step in building a better function. While code can be interpreted by a future user, that can be very time consuming, confusing, and frustrating. There are two ways to comment your code to help a user: (1) in-line comments and (2) function documentation at the beginning.

(1) In-line commenting

While you don’t need to add a comment for every line of code, it is useful to add a comment describing what a chunk of code is doing, or rather, why it is there. For a good explanation of this, see 2.2 Workflow: basics - Comments | R for data science .

In the example below, notice we made a comment “header” , explaining that in the first chunk of code we are performing the site info retrieval and subsetting (further code chunks would have similar headers):

fetch_gage_info <- function(site_ids, col_names = c("site_no", "station_nm")) {

# Retrieve site info and subset columns -----

# If col_names is NULL, don't attempt to subset columns

if(is.null(col_names)) {

warning("No columns were specified in `col_names`. Returning all columns.")

dataRetrieval::readNWISsite(siteNumbers = site_ids)

} else {

dataRetrieval::readNWISsite(siteNumbers = site_ids)[, col_names]

}

}

(2) Function documentation

Another helpful way to document a function is what we’ll call “function-level documentation”. This is writing general documentation that applies to the function as a whole and it contains information that you might see in a function’s help documentation file (e.g., `?paste ). You will want to include a couple pieces of information at a minimum:

- Title: A one-liner that briefly describes what your function does.

- Inputs: Probably the most important piece. What are the arguments in this function, what class should their values be, and what do they do. You should also mention any assumptions you are making about these inputs. If they include a default, a mention of that is useful.

- Outputs: What does the function return. A data frame? A number?

A simple way to implement this would be to just add some comments before you define your function. For example:

# Get site data for specified sites and columns (see

# ?dataRetrieval::readNWISsite for additional details)

#

# `site_ids`: A character vector of USGS site ids.

# `col_names`: A character vector of column names to retain for the output. The

# default is `c("site_no", "station_nm")`. If `NULL` all columns will be

# returned. A value of `NULL` will return the entire data frame.

#

# Output: A data frame containing site information for

# `site_ids` containing only the columns specified in `col_names`

#

fetch_gage_info <- function(site_ids, col_names = c("site_no", "station_nm")) {

...

}

Another slightly fancier way of doing this is using some functionality built into RStudio and made available by the roxygen2 package. This adds a little bit of special syntax to the comments we wrote above that helps to standardize the interpretation by users. While roxygen2 is usually used for creating R package documentation, it helps to provide a standard syntax and format for any function documentation. For example:

#' Get site data for specified sites and columns (see

#' ?dataRetrieval::readNWISsite for additional details)

#'

#' @param site_ids a character vector of USGS site ids, where each site id is at least 8 characters long

#' @param col_names a character vector of column names to retain for the output.

#' The default is `c("site_no", "station_nm")`. If `NULL` all columns will be

#' returned. A value of `NULL` will return the entire data frame.

#'

#' @return A data frame containing site information for sites from subset from

#' `site_ids` containing only the columns specified in `col_names`

We can spot roxygen2 documentation in the source code of many packages. For example, if we look at the source code for dataRetrieval::readWQPdata()

we can see that the first 198 lines are in this format. These lines will then be translated into the readWQPdata.Rd

file which then rendered into the help documentation in R (seen by running ?dataRetrieval::readWQPdata) or into online documentation

.

A keyboard shortcut for adding roxygen2 style comments in RStudio: while your cursor is in your new function’s name in the function definition, type ctrl/cmd+alt+shift+R or click the magic wand icon in the Source pane (usually the top left pane) and then Insert Roxygen Skeleton. That would give us the blank format that we would need to fill in to document our function. There is also guidance on how to fill out this style of documentation at 7 Documentation | The tidyverse style guide

.

Here is the function in all its glory, nicely documented, with good names and arguments.

#' Get site data for specified sites and columns (see

#' ?dataRetrieval::readNWISsite for additional details)

#'

#' @param site_ids a character vector of USGS site ids, where each site id is at least 8 characters long

#' @param col_names a character vector of column names to retain for the output.

#' The default is `c("site_no", "station_nm")`. If `NULL` all columns will be

#' returned. A value of `NULL` will return the entire data frame.

#'

#' @return A data frame containing site information for sites from subset from

#' `site_ids` containing only the columns specified in `col_names`

#'

fetch_gage_info <- function(site_ids, col_names = c("site_no", "station_nm")) {

# Retrieve site info and subset columns -----

# If col_names is NULL, don't attempt to subset columns

if(is.null(col_names)) {

warning("No columns were specified in `col_names`. Returning all columns.")

dataRetrieval::readNWISsite(siteNumbers = site_ids)

} else {

dataRetrieval::readNWISsite(siteNumbers = site_ids)[, col_names]

}

}

Can you imagine how much easier this would be to use than if you were given our original function:

fetch_gage_info <- function(x, y) {

dataRetrieval::readNWISsite(x)[, y]

}

Closing notes

Writing functions can save us a lot of time, but they can also give other users and our future selves a lot of grief if we don’t think through how they’re structured and documented. With some time invested in improving the intuitiveness and usability of your functions, you can save yourself and anyone else who might use your code some time, effort, and gray hairs.

References and further reading

Categories:

Related Posts

Reproducible Data Science in R: Flexible functions using tidy evaluation

December 17, 2024

Overview

This blog post is part of a series that works up from functional programming foundations through the use of the targets R package to create efficient, reproducible data workflows.

Reproducible Data Science in R: Iterate, don't duplicate

July 18, 2024

Overview

This blog post is part of a series that works up from functional programming foundations through the use of the targets R package to create efficient, reproducible data workflows.

Reproducible Data Science in R: Writing functions that work for you

May 14, 2024

Overview

This blog post is part of a series that works up from functional programming foundations through the use of the targets R package to create efficient, reproducible data workflows.

Reproducible Data Science in R: Say the quiet part out loud with assertion tests

September 2, 2025

Overview

This blog post is part of the Reprodicuble data science in R series that works up from functional programming foundations through the use of the targets R package to create efficient, reproducible data workflows.

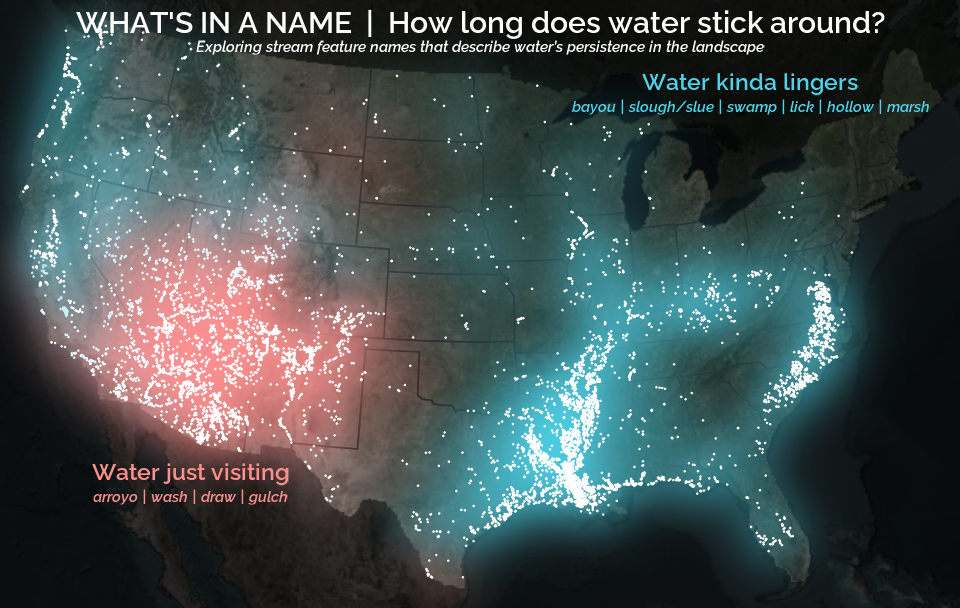

A map that glows with the vocabulary of water

February 27, 2026

English is the official language and authoritative version of all federal information.

A map that glows with the vocabulary of water

What is your first impression of the map above? To me, it is the shimmer. Thousands of points of light, each one a stream or river, illuminating a darkened basemap. Look closely and a pattern emerges: the country’s waterways form a linguistic constellation. These points are classified not from population data or even explicitly by hydrology. They glow strictly according to the vocabulary used to name them and what can be implied about the hydrology of these streams based on their names.